Student skill building

The idea here is to help students master course concepts in depth by applying them in a multi-stage project that serves as both a teaching method and an assessment. The project has both formative and summative evaluation aspects: students submit applied tasks for feedback, which they then use to improve the next, more complex submission. It is designed with an outcomes-based approach in mind, in which the intent is for students to master knowledge and skills to a specified level rather than being marked on a bell curve.

How iterative submissions with feedback help students

This approach, which combines multi-stage submissions with a mix of formative and summative evaluation, helps students:

- Better understand how to apply concepts by comparing their performance with an ideal standard through feedback provided by an expert

- Develop critical thinking capacity that is broadly useful. They focus and plan projects, think strategically, and refine their plans and their understanding in light of new information

- Attain higher skill levels through repeated practice, without the potential boredom of repetition, because each part of the project is distinctively different

It can also lead to a “meeting of the minds” between instructors and students about project requirements and how to meet them. Research shows that this benefits students greatly and moves them further towards developing the evaluative skills of their instructors (Juwah, 4). This, in turn, helps them become self-managing learners who will be more successful in future endeavours.

How iterative submissions with feedback help instructors

This approach reduces the volume of feedback that would typically be provided in major course projects because less, but more impactful, feedback early on keeps projects on track and reduces the amount of correction needed at the final submission stage. Consider such feedback to be “feedforward” in the sense that it provides ongoing corrective focus (Bailey, 191).

It also helps instructors better understand what’s going on in the minds of their students, thus enabling them to refine their teaching methods to better support their students’ learning.

How it works for courses I’m familiar with

The major iterative submission course project with which I have the most direct personal experience is for a course in instructional design. Students are required to create and teach a lesson or module (or similar instruction-related item) in their area of expertise using instructional design principles and procedures. The iterative submissions mimic project deliverables used in industry, helping students develop skills useful in their instructional design practice. (Students are typically a mix of K-12 teachers, instructional designers from the e-learning industry, and training development officers from corporations, institutions, and the military.) Students learn the content and skills better because they are more actively involved in the process of creating. It also helps students think strategically about their work—to plan the entire project and weigh and connect its parts appropriately, while receiving feedback at early enough stages to refine it.

A second iterative-submission course project, in which I’ve played only a support role, is a student video project. Here, nursing students make impactful public service announcements for children in kindergarten to grade 6 and their families, with the goal of making them engaging enough to increase the likelihood of behaviour change. The submission cycle also follows the typical milestone deliverables of video production. The ultimate goal is to give our graduates an advantage in job interviews through the job-relevant multimedia skills they have developed at university.

Instructional module details

This project has three submissions, a few weeks apart, to allow time for marking and for students to implement the feedback:

- Design Document (or Course Plan): project description and goals (overall and for each part)

- Detailed Design Document (an expanded Design Document with descriptions of the project parts and what will be done in each)

- Project Report (about the implementation, user feedback and lessons learned)

The first two submissions are formative evaluations and the third is a summative assessment. The summative submission takes place in a context where students may also receive feedback from peers and the instructor. Between the second and third submissions is an implementation and data collection phase, like the one that occurs when a new instructional module is pilot tested.

See the companion document, Course Project Details (.doc), for more information.

Students usually consult the instructor about their choice of project subject before the first submission, but this is not required.

Student video assignment details

Students are provided with a project description (.doc), checklist (.doc), and grading rubric (.doc). University staff conduct an orientation in the media lab where students will edit their video projects. It covers video and multimedia creation methods (including examples of student work from previous years), as well as camera use, video shoot staging, editing software, and project storyboarding. More technically experienced students are asked to help demonstrate shooting and editing during this session. Students are also taken to the Equipment Pool to see what equipment is available and to learn the process for borrowing it.

A workshop is scheduled for a later date. At this workshop, peers, multimedia lab staff and the instructor evaluate initial project storyboards and/or initial video test shoot files. Media lab staff also post times for coaching individual groups on equipment use, video shoot staging, file editing, etc.

After the workshop, students refine their plans, create and edit the multimedia files, and upload them to the UNB video site, from which they are posted to a server and the links shared with the instructor.

Same topic, many learning activities

A variation on the above theme is one that Carol Reimer, a UNB Nursing Instructor, uses with third-year nursing students. Students pick a topic of interest that comes up in their clinical placement and complete a series of individual and group activities on it throughout the course, receiving feedback on each one. A major goal of this approach is deep learning on a topic relevant to actual on-the-job nursing practice. A key to achieving this is that students are personally invested in the topic because they selected it. The activities are:

- A Media Scan on the topic (a specialized form of annotated bibliography that includes opinions of the items cited and discussion of other students’ Media Scans)

- A Group Project that includes an opinion paper and class debate on the topic

- A Poster Presentation on the nursing implications of the issue covered in the Group Project, including assessment of conceptual depth, clarity, design effectiveness and engagement techniques

Key to success: crafting feedback students can use

Feedback should help students see the next steps and how to take them. Assessment criteria are useful only if students can understand and use them to improve their work. Likewise, feedback comments are useful only if they can be read, understood and taken to heart, and if students can connect comments to actions they can take to improve their work (Defeyter, 24).

Feedback is not effective if it is delayed, overwhelming in quantity, or too vague or general. It is also ineffective if it is not written from the student’s point of view, as information students can use to “…troubleshoot their own performance and take action to close the gap between intent and effect” (Juwah, 10). At the same time, feedback that amounts to a recipe also hinders learning: we want students to think and make their own responses.

Where possible, provide feedback in multiple forms (e.g., spoken in person, audio or video online), not just written.

“Any model of feedback must take account of the way students make sense of, and use, feedback information” (Juwah, 4). Even more important is that students be able to compare their actual performance with a standard and take action to close the gap. To do this, students must acquire some of the evaluative skills of their instructor, so instructors need to help students improve their self-assessment skills. Monitoring progress towards goals involves a process of internal feedback. In this process, students evaluate instructor and peer feedback (along with their own) and revise or refine the work (perhaps even the goals and outcomes).

Students who simply do what the instructor tells them will not learn. Since the point of formative assessment is for students to develop the evaluative skills of their instructors, instructors should provide plenty of self- and peer-assessment tasks. Typical tasks of this kind aim to help students interpret standards or criteria meaningfully and judge accurately how their work compares to those standards or criteria. They usually involve structured reflection and may be facilitated by asking students (Juwah, 7):

- What kind of feedback they would like

- To write their thoughts on the strengths and weaknesses of their work and include them with each work submission

- To set achievement milestones and reflect on progress at each of them (both looking back and forward from there)

- To write feedback on the work of some peers, addressing progress towards goals and outcomes

Peer feedback

By commenting on the work of peers, students develop objectivity in their observations about work quality in relation to standards. This objectivity can then be applied to their own work, improving their internal feedback quality.

Peer feedback is provided more quickly than instructor feedback, and there is often more of it. In providing peer feedback, students express publicly what they know and understand, which helps them further develop their own understanding of the content. This also helps students develop conceptions of quality that are roughly equivalent to those of their instructors, which enables them to better interpret feedback from instructors, tutors, and peers (Liu, 287).

“Building students’ knowledge of how and why assessment takes the form it does, raising awareness of ongoing as well as final processes, teaching students how they can become self- and peer assessors, and revealing how critical thinking about assessment is an integral part of the learning process, should be a primary aim of all university tutors. Such aims can be achieved in a number of ways. Of most importance is the involvement of students in the rationale behind assessment practices” (Smyth, 369).

Improving feedback effectiveness

Feedback is best viewed as a dialogue rather than as information transmission. Dialogue helps students refine their understanding of expectations and standards and get immediate responses to their misconceptions and challenges.

The following have been shown to improve student understanding of standards and criteria (Juwah, 9):

- Provide practical details with criteria statements, including performance standards (e.g., grading rubrics, standards sheets)

- Discuss criteria in class and ask for student input, refining the criteria afterwards. Students want to know and use the reasoning behind the criteria so that they can better understand them and relate them to their own conceptions of the quality of their work (Juwah, 16)

- Provide worked examples with feedback in them

- Conduct an activity in which students collaborate with instructor and each other to develop their own assessment criteria for an assignment. This helps students develop conceptions of quality that are roughly equivalent to those of their instructors, which enables them to better interpret feedback from instructors, tutors, and peers (Liu, 287).

Some balance

So far we have assumed that students actively monitor and regulate their performance with respect to their goals and the strategies used to achieve those goals. Perhaps not all students work in a goal-oriented way. Outcomes-based education rests, usually unreflectively, on a “pervasive techno-rationalism” (Bailey, 189). This view sees learning as a scientific or engineering process with quantifiable steps and goals and one (or a few) optimum ways of achieving them.

Institutional practices such as outcomes/criteria statements and standardized feedback forms may make feedback less effective, not more. This happens if institutional conformity and uniformity are stressed at the expense of pedagogical clarity from the students’ point of view (Bailey, 195). Many instructors know that students do not relate well to officious language.

Feedback needs to be placed in situ (alongside the work it refers to) or clearly referenced to that work. Unreferenced comments on a cover sheet are difficult for students to apply (Bailey, 194).

For reference, Maryellen Weimer (2014) observes, “High achieving students tend to under-estimate their performance and those in low-achieving cohorts over estimate theirs. Low achieving students also have more difficulty learning to make accurate self-assessments.” One technique for improving student performance is to require students to self-assess before submission but to keep the self-assessment private. “Students are more honest if they know the instructor giving the grade isn't going to see their self-assessment. Then the student considers both assessments, his own and the teacher's, and reflects on why they aren't the same.”

Examples

Good examples of student work (“high standard exemplars”) may be more effective than criteria in helping students focus on quality. Focusing on criteria leads students to think about separate qualities of the end product and to overlook overall quality, which arises from the way the criteria combine. Important aspects of overall quality are often missing from criteria lists because they are expected but left implicit (Liu, 288).

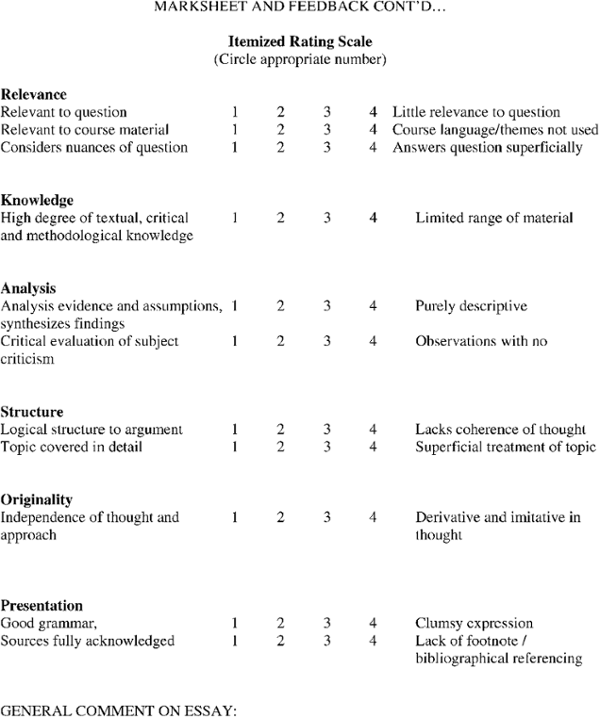

The example grading rubric below is for an English Literature essay. However, the format is quite broadly applicable. It is a combination of simple criterion statements and rating scales. In order to establish a meeting of the minds with students, it would be worthwhile to have a discussion about what is meant by each criterion, and perhaps tweak them based on student questions and feedback.

See the companion article, Feedback That Improves Student Performance for a more detailed discussion of feedback.

(Smyth, 378)

One exercise for fostering a meeting of the minds between instructors (and teaching assistants, if applicable) and students is to have both groups independently rank the assignment criteria by importance, then compare notes and discuss the differences, as Defeyter and McPartlin did (Defeyter, 26):

| Rank | Students (N = 53) | Lecturers (N = 5) |
|---|---|---|
| 1st | Answer the question | Answer the question |
| 2nd | Relevant information | Understanding |
| 3rd | Argument | Argument |
| 4th | Structure/organisation | Relevant information |
| 5th | Understanding | Evaluation |
| 6th | Evaluation | Content/knowledge |
| 7th | Content/knowledge | Structure/organisation |
| 8th | Wide reading | Presentation/style |
| 9th | Presentation/style | Wide reading |
| 10th | English/spelling | English/spelling |

Tips for scaling up feedback for large classes

First- and second-year undergraduate courses typically have large numbers of students and few, if any, teaching assistants. How can instructors provide sufficient feedback on iteratively submitted projects in that context?

Some kind of structured feedback form will almost certainly be necessary in large classes. The possible pitfalls of such forms described above could perhaps be mitigated by strategies such as:

- Hold structured break-out group discussions of feedback (the point is to help students come to a common understanding of the comments that also connects with the instructor’s understanding)

- Turn typical feedback items into class discussion questions, have students respond using clickers, and display histograms of the class responses as the subject of an instructor-led, whole-class discussion

- Have students create action points in class based on feedback they received and discuss them

Setting up autogenerated electronic feedback in Desire2Learn (e.g., rubrics, answer keys, FAQs) may also help. Include typical action points with the standardized feedback comments.

Set up group rating scales in Desire2Learn. Group members rate their own contributions in specified categories, rate the other members of the group, and are rated by the rest of the group and the instructor. They then see an aggregate pictogram of those ratings.

Model strategies used to close common performance gaps through demonstration and worked examples.

References

Bailey, R. & Garner, M. (2010). Is the feedback in higher education worth the paper it is written on? Teachers’ reflections on their practices. Teaching in Higher Education, 15(2).

Defeyter, M. A. & McPartlin, P. L. (2007). Helping students understand essay marking criteria and feedback. Psychology Teaching Review, 13(1).

Juwah, C., Macfarlane-Dick, D., Matthew, B., Nicol, D., Ross, D., & Smith, B. (2004). Enhancing student learning through effective formative feedback. The Higher Education Academy, York, UK.

Liu, N. F. & Caress, D. (2006). Peer feedback: the learning element of peer assessment. Teaching in Higher Education, 11(3).

Smyth, K. (2004). The benefits of students learning about critical evaluation rather than being summatively judged. Assessment & Evaluation in Higher Education, 29(3).

Weimer, M. E. (2014). Developing Students’ Self-Assessment Skills. Faculty Focus, December 10.