Grading rubrics

Grading rubrics are essentially an attempt to quantify qualitative judgment by documenting observable and measurable aspects of student performance. They are not perfect, but they offer several advantages:

- Enabling student success by clearly showing what is expected and how student work will be evaluated.

- Helping students become better judges of the quality of their own work.

- Making assessment more objective and consistent.

- Reducing the time required for teachers to mark student work by automating repetitive tasks.

- Providing students with more informative feedback on both areas of strength and those in need of improvement, even for larger classes.

- Providing useful feedback on the effectiveness of the instruction.

(Anglin et al., 2008, p. 53)

Advantages and disadvantages of grading rubrics

The quality of student work is likely to increase if students can view the rubric before they submit their work, because they have a more detailed idea of what the assignment requires (Anglin et al., 2008, p. 54).

Much of the repetitive feedback you give students is “automated”: you can simply point to one of the performance descriptions in the rubric. This could save time, or make it possible to provide this level of detailed feedback to large classes. Alternatively, you could use your evaluation time to focus on individualized feedback while pointing to the applicable “canned” feedback, which greatly increases the value of the feedback to students.

If students question a grade you gave, the rubric provides a detailed basis for the discussion. Instead of the discussion being, “I think I did A-level work” and the reply being “In my professional judgment, you did B-level work,” the student is more likely to say, “You rated me at performance level X, but I think my work was at this higher performance level Y.” The discussion then focuses on the student’s evidence (or at least argument) that the work is as described in the higher performance level description.

We need to keep in mind, however, that a grading rubric tries to quantify what is largely a qualitative judgment, and that cannot be done perfectly, even in theory. We do it to give students specific guidance that will improve their performance. The attempt at quantification also risks making students process-focused rather than ideas-focused: they may concentrate on the mechanics of producing the assignment and miss the deeper learning that comes from engaging with ideas.

Once you produce a rubric for one assignment, students may expect you to provide rubrics for most or all assignments, projects, assessments, and learning activity participation.

While informing and guiding, evaluation rubrics can unintentionally constrain students, keeping them from the unexpected, creative, and more imaginative responses that come from less prescribed assignments.

However, keeping rubrics’ limitations in mind, you can craft and use them to maximum advantage while minimizing their disadvantages.

There are two main types of rubrics: analytic and holistic.

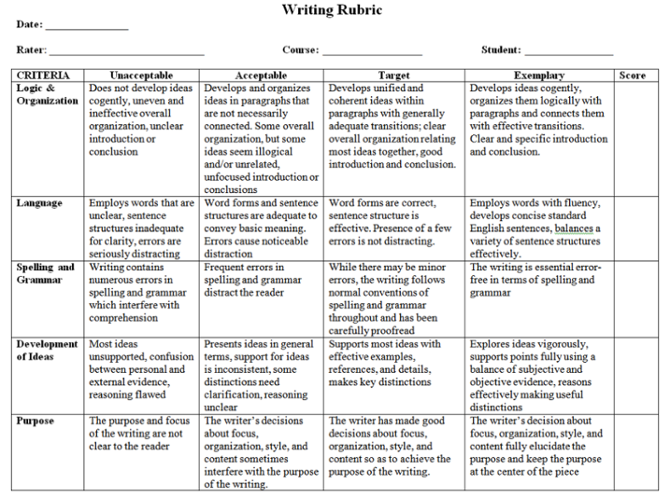

Analytic grading rubrics

Analytic rubrics set out several levels of achievement for each performance criterion. Each level for each criterion has a precise descriptor of what students should demonstrate that they know and can do, stated in terms that are as observable and measurable as possible. The criteria are linked to the outcomes for the project or course. See the example below.

The challenge is to create descriptors that are as free as possible from subjective words such as “good, better, best” and that describe what students should demonstrate, rather than what is missing or incorrect, especially for the lower performance levels.

Advantages and disadvantages of analytic rubrics

An analytic grading rubric can be more complicated to administer because it has more detail and finer gradations of performance. An advantage, however, is that it allows more precise marking, because you can mark each criterion independently and each criterion has its own descriptor at each performance level.
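As a rough illustration of this structure, an analytic rubric can be modelled as a grid of criteria by performance levels with one descriptor per cell. The Python sketch below is only an assumption about how such a grid might be represented; the criterion names, level names, descriptors, and the `mark` function are all hypothetical.

```python
# Hypothetical analytic rubric: each criterion has one descriptor per level.
# Criterion names, level names, and descriptors are made up for illustration.
RUBRIC = {
    "thesis": {
        "below threshold": "Names the topic of the paper.",
        "threshold": "Takes a position on the topic.",
        "target": "Takes a position and previews the supporting points.",
        "exemplary": "Takes a position, previews support, and answers a counterargument.",
    },
    "evidence": {
        "below threshold": "Refers to course readings in general terms.",
        "threshold": "Cites at least one source for the main claim.",
        "target": "Cites a source for every claim.",
        "exemplary": "Cites a source for every claim and compares sources.",
    },
}


def mark(rubric, selections):
    """Look up the descriptor for the level chosen on each criterion.

    Each criterion is marked independently of the others.
    """
    missing = set(rubric) - set(selections)
    if missing:
        raise ValueError(f"No level selected for: {sorted(missing)}")
    return {criterion: rubric[criterion][level]
            for criterion, level in selections.items()}


# One student's work can sit at different levels on different criteria.
feedback = mark(RUBRIC, {"thesis": "target", "evidence": "threshold"})
for criterion, descriptor in feedback.items():
    print(f"{criterion}: {descriptor}")
```

Because each criterion is looked up separately, the descriptor returned for a criterion doubles as the feedback the student receives for it.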

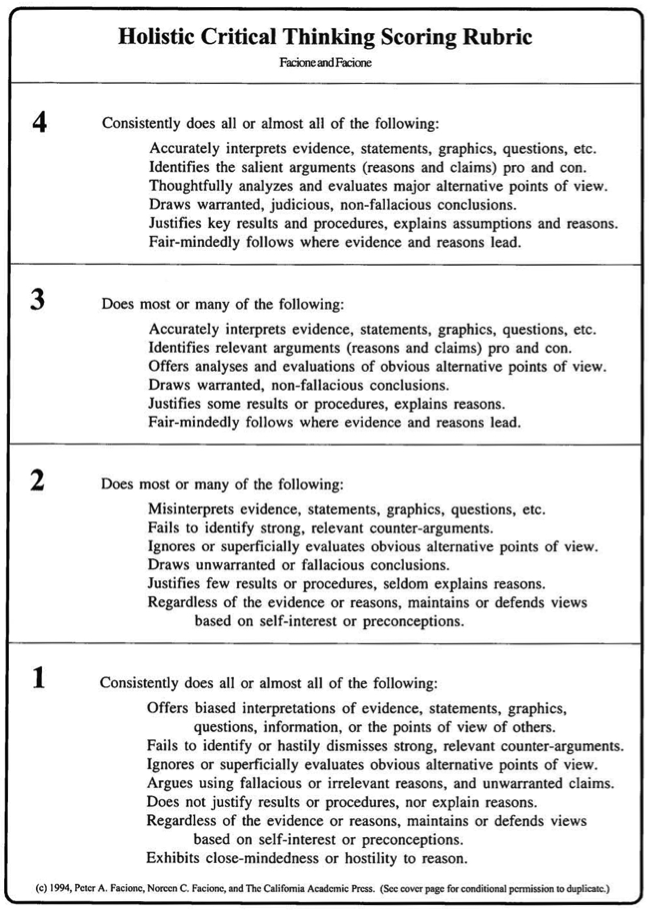

Holistic grading rubrics

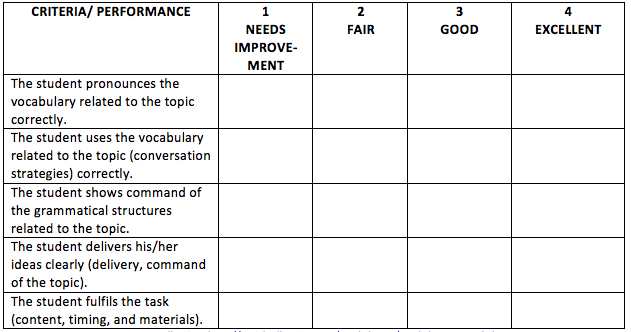

Holistic rubrics either put all the criteria descriptions together in one box for each performance level, as illustrated below, or just list the criteria, each with a rating scale of, say, 1 to 4.

Advantages and disadvantages of holistic rubrics

One advantage is that holistic rubrics seem simpler to use because they have less detail. The disadvantage is that evaluation using them is less precise, since any particular assignment is unlikely to match any one of the descriptors entirely. Also, the type that uses rating scales, such as the one below, does not make explicit the reasons for the numerical rating you gave, which somewhat defeats the purpose of a grading rubric.

Tips for creating rubrics

- Decide what the learning outcomes or objectives are for the assignment (or whatever the rubric is meant to evaluate). These become the basis of the criteria for evaluation, which are typically listed in the left-hand column of the rubric.

- Decide what to call the performance levels (poor, fair, good, excellent; unsatisfactory, satisfactory, above average, exceptional; below threshold, threshold, target, exemplary, etc.). You can get some ideas by asking other instructors in your department or by searching online for rubrics in your subject area and year level. Use an even number of performance levels on the scale (typically 4 or 6). When an odd number of levels is used, the middle level tends to become a catch-all category. With an even number of levels, raters have to make a more precise judgement about a performance when its quality is not at the top or bottom of the scale (University of Minnesota).

- Generate some raw content for performance descriptors. There are many possibilities:

  - Find out how the real world defines quality for this item, and brainstorm with experts and other instructors.

  - Search online for rubrics in your subject area and year level.

  - Draw on your own expertise and experience.

  - Create quality criteria items based on an evidence-based quality definition for your subject area.

  - Gather many samples of work of varying quality, from both students and experts. Sort the work into 4-6 quality or performance categories (e.g., unsatisfactory, satisfactory, good, very good) and create knowledge and skills descriptions for each quality category and each quality criteria item, using the work as a guide.

- Refine your descriptions for each rubric cell so that they are specific to your assessment item, as observable and measurable as possible, and able to stand on their own rather than being a list of what is not present. Avoid purely qualitative descriptors for each level (poor execution, acceptable execution, good execution, excellent execution); leaving such terms undefined defeats the purpose of quantifying the judgment.

- Consider criteria weighting: are all criteria equally important, or are some more important or more time-intensive than others?

- Are the levels you have created parallel? That is, are the criteria present in all levels? Is there continuity in the difference between the criteria for exceeds vs. meets, and meets vs. does not meet expectations?

- Ideally, test the rubric with a small group of students to further refine it from experience. Typically, though, you do your best and refine it from year to year based on experience in its use.
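Two of the tips above, criteria weighting and parallel levels, lend themselves to a small worked example. The Python sketch below is one possible approach under stated assumptions: levels are scored linearly from 1 to the number of levels, and the weights, criterion names, and function names are hypothetical rather than taken from any particular grading system.

```python
# Hypothetical levels, weights, and marks. Scoring levels linearly as 1..N
# is an assumption; other point schemes are equally possible.
LEVELS = ["unsatisfactory", "satisfactory", "good", "very good"]


def is_parallel(rubric, levels):
    """True when every criterion has a descriptor for every level."""
    return all(set(cells) == set(levels) for cells in rubric.values())


def weighted_percentage(selections, weights, levels):
    """Score each criterion 1..len(levels), weight it, and express the
    total as a percentage of the maximum possible weighted score."""
    if set(selections) != set(weights):
        raise ValueError("Every weighted criterion needs exactly one selected level")
    earned = sum(weights[c] * (levels.index(level) + 1)
                 for c, level in selections.items())
    possible = len(levels) * sum(weights.values())
    return 100 * earned / possible


# A draft rubric where one criterion is still missing descriptors.
draft = {
    "content": {level: "descriptor to be written" for level in LEVELS},
    "mechanics": {"good": "descriptor to be written"},
}
print(is_parallel(draft, LEVELS))  # False

# Content counts three times as much as mechanics in this example.
weights = {"content": 3, "organization": 2, "mechanics": 1}
selections = {"content": "very good", "organization": "good",
              "mechanics": "satisfactory"}
score = weighted_percentage(selections, weights, LEVELS)
print(round(score, 1))  # 83.3
```

Here the student earns 3×4 + 2×3 + 1×2 = 20 of a possible 24 weighted points, which is why the result is about 83.3 percent.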

A radical idea: try developing rubrics interactively with your students.

You can enhance students’ learning experience by involving them in the rubric development process. Either as a class or in small groups, have students decide upon criteria for grading the assignment. (These should later be reconciled in some meaningful way with the course outcomes or learning objectives.) Providing students with samples of exemplary work would help. Your role in this activity is that of a facilitator, guiding the students toward the final goal of a rubric that can be used on their assignment. This activity may result in a richer student learning experience, and students are likely to feel more personally invested in the assignment because they were included in the decision-making process.

Tips on using rubrics effectively

- Develop a different rubric for each assignment. Although this takes time in the beginning, you’ll find that rubrics can be changed slightly or reused later.

- Give students a copy of the rubric when you assign the performance task. Online, you can create the rubric in Desire2Learn and make it visible to students in the assignment description.

- Rubrics need to be discussed with students to create a common understanding of expectations.

- For paper submissions, require students to attach the rubric to the assignment when they hand it in. Online, you will use the Desire2Learn rubric to mark each assignment by clicking performance levels.

- When you mark the assignment, circle or highlight the achieved level of performance for each criterion. This happens online with a simple mouse click.

- Include any additional comments that do not fit within the rubric’s criteria. Online, you can type these into a text field available for that purpose.

- Decide upon a final grade for the assignment based on the rubric. Online, this mark is generated automatically by the system, but you can change it if you wish.

- Return the rubric with the assignment. This happens automatically online in Desire2Learn.
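The marking steps above (select a level per criterion, add free-text comments, accept or adjust the generated mark) can be pictured with a small Python sketch. This is not Desire2Learn's API or data model; the `MarkedAssignment` class, its fields, and the point values are hypothetical and only illustrate the idea of an automatically generated mark that the instructor can override.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class MarkedAssignment:
    """Hypothetical record of one marked assignment (not a real LMS type)."""
    level_points: Dict[str, float]    # criterion -> points for the level selected
    comments: str = ""                # feedback that does not fit the criteria
    override: Optional[float] = None  # instructor's adjusted mark, if any

    def computed_mark(self) -> float:
        """Mark generated from the selected levels alone."""
        return sum(self.level_points.values())

    def final_mark(self) -> float:
        """Use the instructor's override when one is set."""
        return self.computed_mark() if self.override is None else self.override


marked = MarkedAssignment(
    level_points={"content": 8, "organization": 6},
    comments="Strong opening example; consider a shorter conclusion.",
)
print(marked.final_mark())  # 14

# The instructor decides the generated mark undervalues the work.
marked.override = 15
print(marked.final_mark())  # 15
```

Keeping the computed mark and the override separate means the rubric-based result stays visible even after the instructor changes the final grade.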

Some consider it better to create a generic rubric for each applicable item in your course syllabus evaluation scheme rather than a rubric for each specific assignment, because creating rubrics is time-consuming. Generic rubrics also help students see their improvement over time on the items of the same rubric. However, generic rubrics may not meet your goal of providing specific, detailed guidance at each performance level for each criterion across widely different assignments, all of which are important for developing knowledge and skills in your course subject area.

References

Anglin, L., Anglin, K., Schumann, P. L., & Kaliski, J. A. (2008). Improving the Efficiency and Effectiveness of Grading Through the Use of Computer-Assisted Grading Rubrics. Decision Sciences Journal of Innovative Education, 6(1).

Association for Assessment in Higher Education Assessment Resources (Scroll down to Rubrics)

Kryder, L. G. (2003). Grading for Speed, Consistency, and Accuracy. Business Communication Quarterly, 66(1).

Loveland, T. L. (2005). Writing Standards-Based Rubrics for Technology Education Classrooms. The Technology Teacher. October.

University of Minnesota. Process: Creating Rubrics.

The University of Scranton Kania School of Management Assessment Tools

University of Waterloo Centre for Teaching Excellence teaching tips, Rubrics: Useful Assessment Tools.